Using robots.txt

Developers can make their own robots.txt file and add it to the application to control how web crawling bots access their site.

It is important to note that the robots.txt file is always publicly available: anyone can read it, and listing a path, even under 'Disallow', reveals that the path exists. Take care not to expose sensitive information when adding paths to the robots.txt file.

It is also important to note that bots are not obliged to follow the robots.txt file. Well-behaved crawlers respect it, but others can ignore it, so it does not guarantee that bots stay away from any path and is not a security measure against bots.

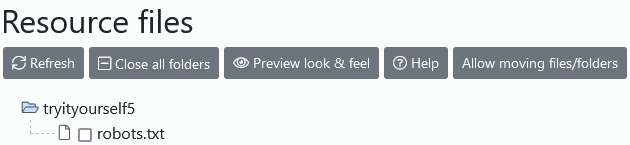

Making a robots.txt file

The robots.txt file should be added to the root directory of the file manager.
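The root directory matters because crawlers only request the file from the top level of a host: for any page, they replace the path with /robots.txt. The sketch below shows that derivation with Python's standard urllib.parse module. It assumes the root of the file manager is served at the root of the site; the helper name robots_url and the host example.com are illustrative only.

```python
from urllib.parse import urljoin


def robots_url(page_url: str) -> str:
    """Return the robots.txt location a crawler would check for page_url.

    Joining with the absolute path "/robots.txt" keeps the scheme, host and
    port of the page but replaces its path, query and fragment, so a
    robots.txt placed in a subdirectory is never requested.
    """
    return urljoin(page_url, "/robots.txt")


if __name__ == "__main__":
    # example.com is a placeholder host.
    print(robots_url("https://example.com/public/page.html?x=1"))
```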

Inside the file, entries should be added with the following layout:

```
User-agent:
Allow:
Disallow:
```

After 'User-agent', enter the name of the robot.
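Crawlers read these entries as groups: one or more User-agent lines followed by the Allow and Disallow lines that apply to them. A minimal sketch of that grouping in Python follows, assuming the group model of the robots.txt standard (RFC 9309): field names are case-insensitive, spaces around the colon are tolerated, text after '#' is a comment, and a bot without a group of its own falls back to the '*' group. parse_robots and rules_for are hypothetical helpers, not part of the platform.

```python
def parse_robots(text):
    """Split robots.txt text into a list of (user_agents, rules) groups.

    user_agents holds lower-cased names; rules holds ("allow" | "disallow", path)
    tuples in file order.
    """
    groups = []
    current = None      # group that is currently receiving lines
    seen_rule = False   # True once the current group has an Allow/Disallow line
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and outer whitespace
        if ":" not in line:
            continue                         # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # Consecutive User-agent lines share one group; a User-agent line
            # that follows rules starts a new group.
            if current is None or seen_rule:
                current = ([], [])
                groups.append(current)
                seen_rule = False
            current[0].append(value.lower())
        elif field in ("allow", "disallow") and current is not None:
            current[1].append((field, value))
            seen_rule = True
    return groups


def rules_for(groups, bot_name):
    """Collect the rules of every group naming bot_name, else of the '*' group."""
    name = bot_name.lower()
    matching = [rules for agents, rules in groups if name in agents]
    if not matching:
        matching = [rules for agents, rules in groups if "*" in agents]
    return [rule for rules in matching for rule in rules]


if __name__ == "__main__":
    sample = "User-agent : Googlebot\nDisallow : /private/\n\nUser-agent : *\nDisallow : /\n"
    groups = parse_robots(sample)
    print(rules_for(groups, "Googlebot"))
    print(rules_for(groups, "OtherBot"))
```

Here Googlebot gets only its own Disallow /private/ rule, while any other bot falls back to the rules of the '*' group.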

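After 'Allow' and 'Disallow', add the path that is allowed or disallowed, starting with /. A 'User-agent' line can be followed by several 'Allow' and 'Disallow' lines. Paths that are not disallowed are implicitly allowed. When both an 'Allow' and a 'Disallow' path match a URL, crawlers that follow the robots.txt standard (RFC 9309), such as Googlebot, apply the most specific rule, which is the one with the longest path.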

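The '*' wildcard can be used in all three lines. After 'User-agent', a lone '*' addresses every robot. In a path, '*' matches any sequence of characters, and a '$' at the end of a path marks the end of the URL; for example, /*.gif$ matches every path that ends in .gif.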
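To make these matching rules concrete, here is a sketch of how a crawler could decide whether a path is allowed. It assumes the precedence described in RFC 9309 and used by Googlebot: '*' matches any sequence of characters, a trailing '$' anchors the end of the path, the longest matching rule wins, Allow wins a tie, and a path that matches no rule is allowed. is_allowed is a hypothetical name, and percent-encoding is ignored for simplicity.

```python
import re


def _to_regex(pattern):
    """Compile a robots.txt path pattern into a regular expression.

    '*' matches any sequence of characters and a trailing '$' anchors the
    end of the path; every other character is matched literally, starting
    at the beginning of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def is_allowed(rules, path):
    """Return True if path may be crawled under (kind, pattern) rules.

    kind is "allow" or "disallow". The longest matching pattern wins, Allow
    wins a tie, and a path that matches no rule is implicitly allowed.
    """
    best_length, allowed = -1, True
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty value ("Disallow:") matches nothing
        if _to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_length or (length == best_length and kind == "allow"):
                best_length, allowed = length, kind == "allow"
    return allowed


if __name__ == "__main__":
    rules = [("disallow", "/*.gif$"), ("allow", "/public/")]
    for path in ["/images/logo.gif", "/public/logo.gif", "/images/logo.png"]:
        print(path, is_allowed(rules, path))
```

With the rules Disallow / and Allow /public/example.gif, for instance, only /public/example.gif is allowed under this precedence, because its Allow path is longer than /.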

Examples

In the following example, Google's search bot (Googlebot) is allowed to access all paths except those starting with /private/. No 'Allow' line is needed, because all paths that are not disallowed are implicitly allowed.

```
User-agent: Googlebot
Disallow: /private/
```

In this example, all bots are disallowed from every path except /public/example.gif:

```
User-agent: *
Disallow: /
Allow: /public/example.gif
```

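In this example, Googlebot is disallowed from paths ending with .gif. The 'Allow' line keeps paths starting with /public/ accessible; because its path is longer than /*.gif$, Googlebot treats it as the more specific rule, so .gif files under /public/ also remain allowed:

```
User-agent: Googlebot
Disallow: /*.gif$
Allow: /public/
```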

In this example, Googlebot is allowed every path except those starting with /private/, while SpamBot is disallowed from every path:

```
User-agent: Googlebot
Disallow: /private/

User-agent: SpamBot
Disallow: /
```
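Python's standard library also ships a robots.txt parser, urllib.robotparser, which can be used to check a simple file before uploading it. It applies rules in file order and does not interpret '*' inside paths, so it suits files like the Googlebot and SpamBot example but gives different results for the wildcard and Allow-override examples. The host example.com is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# The Googlebot and SpamBot example; example.com below is a placeholder host.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: SpamBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

checks = [
    ("Googlebot", "https://example.com/index.html"),
    ("Googlebot", "https://example.com/private/a.html"),
    ("SpamBot", "https://example.com/index.html"),
]
for bot, url in checks:
    # can_fetch() picks the entry for the bot name and applies its rules.
    print(bot, url, parser.can_fetch(bot, url))
```

A bot that is not named in the file and has no '*' entry to fall back on, such as Bingbot here, is allowed everywhere.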